Weekly Link Roundup #895 (actually #59 IFYKYK)

In which we discuss the serious topic of the revelatory nature of designing your own action figure...

So yeah, I did it too. They got so close on the Capitals' jersey (as long as you don't look too close). I think it's a deceptively subversive little thing, and maybe that's just me, but I had a couple of thoughts. :-) First, you have to think of your title, but it's a title free from job descriptions, roles and responsibilities, and org structures. Maybe that means these titles can shed a little more light on how we see ourselves. Then we get to describe our own superpowers and we even get to pick out our accessories. It really reminds me of when Second Life was big and everyone was talking about how your avatar could be anything, and I was thinking yeah, no physical boundaries, no economic boundaries, we can mold our avatars into however we want ourselves to be seen. That's kinda cool. :-)

Decentralized platform is letting users own a piece of the AI models trained on their data: I can’t love this idea enough. If this problem gets cracked, and at scale, think about a consumer-side agent, trusted to have your personal interests at heart, negotiating on your behalf for other AI models to use your data. Just brilliant. > > “The decentralized platform Vana, which started as a class project at MIT, is on a mission to give power back to the users. The company has created a fully user-owned network that allows individuals to upload their data and govern how they are used. AI developers can pitch users on ideas for new models, and if the users agree to contribute their data for training, they get proportional ownership in the models.”

OpenAI Academy: Have you even signed up yet?

Microsoft AI Skills Fest: Fine, I’ll ask again - have you signed up yet?

Don’t believe reasoning models’ Chains of Thought, says Anthropic: Yes, you’re right - I will use this super interesting article to say that this is another place where L&D could be leading. As these developments come out, and they come out near daily, L&D teams could be hosting a series of roundtables with SMEs, developers, and senior leaders to help make sense of them. Include Sales, GTM, Marketing, et al., but be seen as leading the conversation and raising the AI literacy bar across the org. > > From an Anthropic blog post > > “There’s no specific reason why the reported Chain-of-Thought must accurately reflect the true reasoning process; there might even be circumstances where a model actively hides aspects of its thought process from the user.” See also > > CRA and Microsoft Launch New Fellowship to Advance Trustworthy AI Research.

SchoolAI Raises $25M in Series A Funding: Let’s leave aside for the moment whether you think this is a good or bad development. What it is, though, is an upstream signal of a generation that will arrive at your company with an expectation around AI. > > “SchoolAI provides a classroom experience platform that combines AI assistants for teachers that help with classroom preparation and other administrative work, and Spaces–personalized AI tutors, games, and lessons that can adapt to each student’s unique learning style and interests. Together, these tools give teachers actionable insights into how students are doing, and how the teacher can deliver targeted support when it matters most.”

Vimeo CEO says he wasn’t allowed to use adverbs when he was working at Amazon—here’s why he thinks it helps companies to not ‘lose their way’: I don’t have a subscription so I can’t share great insights from the article, but as a former Amazonian, I can tell you this is true. When you write everything (no PPT at Amazon), you’re forced to be deeply mindful of your language, and that vocabulary does not include flowery language. Stick to the story and the data.

ADVANCES AND CHALLENGES IN FOUNDATION AGENTS FROM BRAIN-INSPIRED INTELLIGENCE TO EVOLUTIONARY, COLLABORATIVE, AND SAFE SYSTEMS: Buckle up folks - this one is a 264-page doozy. Actually doozy is incorrect - I meant to say this may be the most comprehensive examination of agents and agentic architecture out currently. From the abstract > > This survey provides a comprehensive overview, framing intelligent agents within a modular, brain-inspired architecture that integrates principles from cognitive science, neuroscience, and computational research. We structure our exploration into four interconnected parts.

First, we delve into the modular foundation of intelligent agents, systematically mapping their cognitive, perceptual, and operational modules onto analogous human brain functionalities, and elucidating core components such as memory, world modeling, reward processing, and emotion-like systems.

Second, we discuss self-enhancement and adaptive evolution mechanisms, exploring how agents autonomously refine their capabilities, adapt to dynamic environments, and achieve continual learning through automated optimization paradigms, including emerging AutoML and LLM-driven optimization strategies.

Third, we examine collaborative and evolutionary multi-agent systems, investigating the collective intelligence emerging from agent interactions, cooperation, and societal structures, highlighting parallels to human social dynamics.

Finally, we address the critical imperative of building safe, secure, and beneficial AI systems, emphasizing intrinsic and extrinsic security threats, ethical alignment, robustness, and practical mitigation strategies necessary for trustworthy real-world deployment.

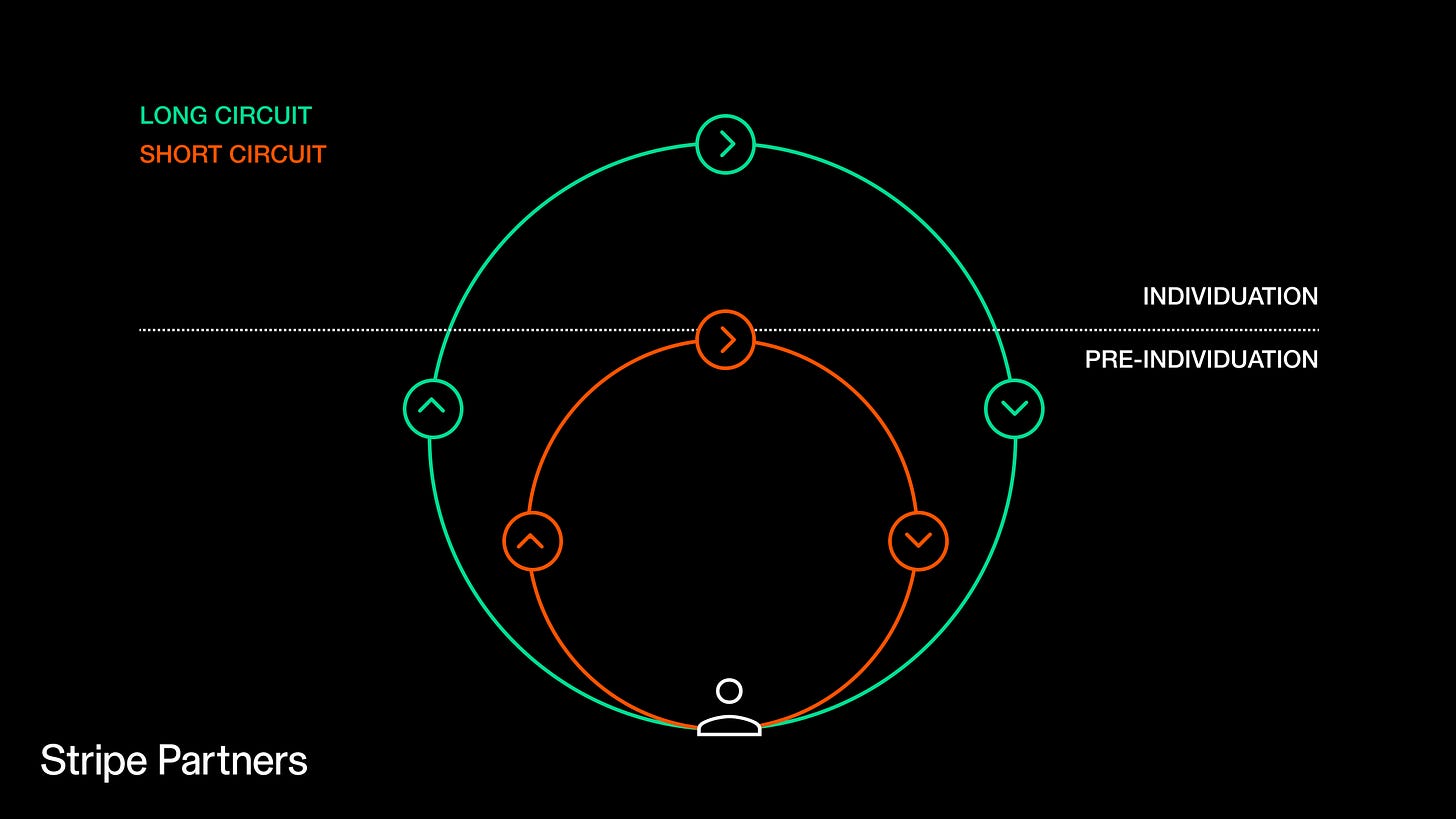

Using Bernard Stiegler’s pharmacological approach to heal from digital fatigue (via Frames): So I wouldn’t expect companies that have business models based on engagement to follow this model, but wowza could #LearningAndDevelopment teams benefit from this approach. The image below (thank you Stripe Partners) shows a “long circuit” which includes time for reflection and for us to use technology in a way that allows for “individuation.” These long circuits (think learning an instrument) “require attention, participation, and reflection—and often give people a sense of meaning and purpose.” This is as opposed to “short circuits” which deliver that engagement hit of dopamine before “individuation” is able to take place. That’s how so much of current social media operates. It’s also how we build a lot of our training content. We act like that one hit is sufficient (looking at you, compliance training). Maybe we need to be looking at ways that we can build long circuits for our learners instead of the quick hit of the “next” button and the release of the “print certificate” command.

63% of employers say skill gaps are the biggest hurdles to AI adoption: “While 97% of executives believe AI will fundamentally reshape their industries, 65% also admit they lack the technological expertise to lead these transformations effectively.” > > Golly. If only there were some kind of organization that dealt with learning and such that could help those execs close that skills gap. Think about how those orgs would be viewed. Valuable, I bet. See also > > The Great Skill Shift: How AI Is Transforming 70% Of Jobs By 2030: And I bet this passage might catch the eye of Marc Steven Ramos: “AI is about to make this broken system unsustainable. Why? Because AI forces us to think about jobs not as titles but as collections of tasks requiring specific skills. And as tasks change, we need a clearer understanding of the skills people have and the skills jobs require.”

How to Use NotebookLM’s New Discover Sources Tool for Faster Research: I already really liked NotebookLM. > > “Google Labs has introduced “Discover sources,” a new feature in NotebookLM designed to streamline the process of exploring and analyzing topics. This feature curates relevant sources from reputable websites, enabling users to quickly gather information and start projects more efficiently. Additionally, a new “I’m Feeling Curious” button allows users to explore random topics, showcasing the tool’s capabilities.”

The tool integration problem that’s holding back enterprise AI (and how CoTools solves it): Yes, it’s a little geeky but so am I. I actually think this falls squarely in the bucket of things that will speed enterprise adoption. > > “Researchers from the Soochow University of China have introduced Chain-of-Tools (CoTools), a novel framework designed to enhance how large language models (LLMs) use external tools. CoTools aims to provide a more efficient and flexible approach compared to existing methods. This will allow LLMs to leverage vast toolsets directly within their reasoning process, including ones they haven’t explicitly been trained on.”

OpenAI plans to make Deep Research free on ChatGPT, in response to competition: I’m including this as another signal that you and your org need to be experimenting. That means coming up with a hypothesis and testing it. The ability of reasoning models to do research like this is a feature development that people can see immediate ROI on... BUT... only if they can trust it. Set those conditions. Test. Examine results.

Anthropic launches an AI chatbot plan for colleges and universities: Two things here - #1 another upstream signal of learner expectations being raised and #2 read that second part where Claude will be asking questions to test knowledge. Goosebumps? Shivers? > > “The new tier is aimed at higher education, and gives students, faculty, and other staff access to Anthropic’s AI chatbot, Claude, with a few additional capabilities. One piece of Claude for Education is “Learning Mode,” a new feature within Claude Projects to help students develop their own critical thinking skills, rather than simply obtain answers to questions. With Learning Mode enabled, Claude will ask questions to test understanding, highlight fundamental principles behind specific problems, and provide potentially useful templates for research papers, outlines, and study guides.”