Seems relevant to the “we don’t actually know how LLMs are reasoning/thinking/deciding” discussion…

Tyger Tyger, burning bright,

In the forests of the night;

What immortal hand or eye,

Could frame thy fearful symmetry?

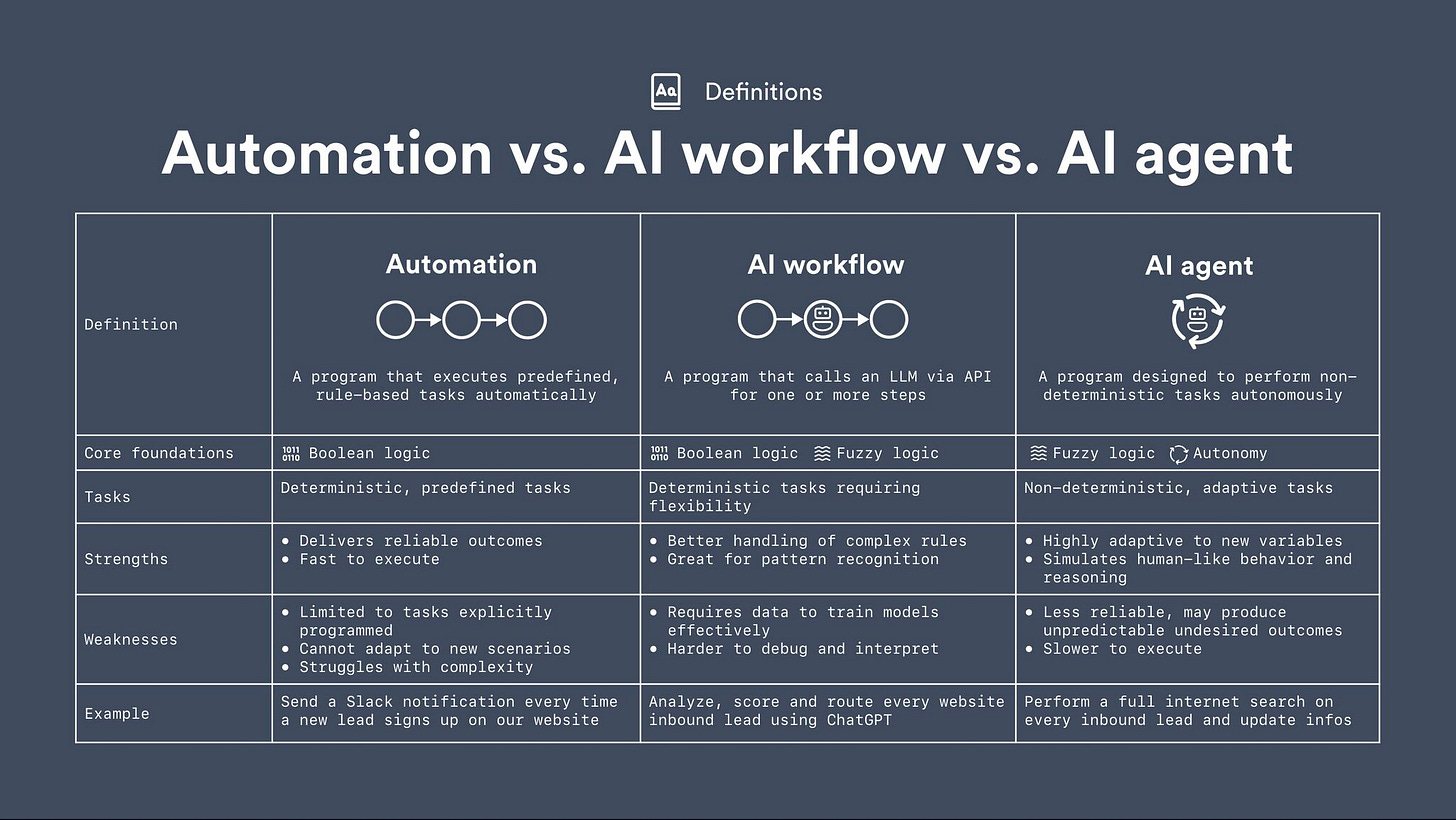

Automation vs AI Workflow vs AI Agent: *Cautionary note before reading further.*

> We NEED models right now. We need text descriptions of models and we need diagrams of models. They are guideposts on our journey to understand this AI-enabled world, which, like it or not, we live in. THAT BEING SAID:
>
> We must remain critical of, and question, the models that people put forward. Not on a personal level, but because that’s how rigorous inquiry works. The LAST thing we need to do now is adopt some model as received wisdom, act like it’s immutable, and build entire business models around it. I’ve seen it happen in L&D, and that dynamic only serves to quash experimentation and innovation. That being said, read on.

Alexandre here is someone you should probably follow on LinkedIn. He created the image above (the link takes you to the LinkedIn post). The image also gives me a chance to make the point again that this is the kind of education and training that #LearningAndDevelopment needs to be working on now. This is the help that is needed across your org to raise the bar on AI literacy.

From one to many: AI agents and the hyper scaling of human thought – my view leading Microsoft HR’s AI strategy: Lots of things make this an interesting read - it’s MSFT, it’s HR at MSFT, and it’s written by Christopher J. Fernandez, Corporate Vice President, Human Resources at Microsoft - so someone who can speak with some authority on the topic. Couple this with Ross Dawson’s review of Microsoft’s annual Work Trend Index and you’ve got some great reading. You should read both, but here is my quibble - while we’re talking about agents as team members and AI filling capacity gaps, what I’m not seeing (and please, if you are, point me in its direction) are discussions of how the underlying structures of performance review and pay will change, or how they should change to optimize outcomes for orgs and employees. We need to be having discussions about the 2nd and 3rd order effects that will flow from these changes. Just to be clear, 2nd and 3rd order effects by no means have to be smaller in effect size than 1st order changes - they are just less predictable. We need more clarity there as well. See also: AI Agents And The Hybrid Organization: 3 Insights From Microsoft.

Character.AI unveils AvatarFX, an AI video model to create lifelike chatbots: Dear Instructional Designers and #LearningAndDevelopment teams, PLEASE don’t get distracted by this. Plain text that’s timely, correct, and on point will be so much more useful to people than talking avatars (not in all situations, but in most).

Is your AI product actually working? How to develop the right metric system: Talk about a critical skill that AI won’t soon replace - developing metrics to understand whether the AI is producing what you need it to. There’s a nuance here - I can’t find the original citation, but W. Edwards Deming is reported as saying that "every system is perfectly designed to get the results it gets." So when you start deploying AI, develop your metrics for success first, away from the tech. Decide on the results you want to get and focus there - otherwise the system will produce the outcomes it was designed to produce, and those may not be the ones you need.

> “Additionally, if you do not actively define the metrics, your team will identify their own back-up metrics. The risk of having multiple flavors of an ‘accuracy’ or ‘quality’ metric is that everyone will develop their own version, leading to a scenario where you might not all be working toward the same outcome.”

Ray-Ban Meta Glasses are my favorite AI gadget, and they keep getting better: I’ve always wanted AI-enabled glasses that just bring AR right to you. The only thing I want now is glasses built independently of a company that’s trying to sell you other things. I really don’t want my glasses becoming just another ad surface.

> “A year later, I can now ask Meta AI what I’m looking at to recognize iconic buildings while strolling through the streets of Amsterdam or video call my girlfriend on WhatsApp and show her the Eiffel Tower from my perspective.”

See also (I mean, just imagine working in tight quarters with a 100-inch screen): Spacetop AR is now an expensive Windows app instead of a useless screenless laptop.

Despite positive reviews, indie dev declares their early access roguelike "fails in fundamental ways," makes it free forever, and moves the heck on: I can’t love this story enough. Want to see courage in a builder? Want to see someone who embodies what we used to call being vocally self-critical? This person did what so many others in so many orgs have failed to do - stop putting effort into something that’s not working, and explain why. Huge kudos to Emerick Gibson.

> “One indie developer has made their early access roguelike game entirely free and moved on from the project after declaring it "fails in fundamental ways that made it hard to fix."”

Looking Beyond the Hype: Understanding the Effects of AI on Learning: Remember what I said about needing models earlier?

> “Building on this foundation, we introduce the ISAR model, which differentiates four types of AI effects on learning compared to learning conditions without AI, namely inversion, substitution, augmentation, and redefinition.”

Designing AI to Think With Us, Not For Us: OK - wow - what a great read.

> “The Problem-Solution Symbiosis framework and toolkit extends, rather than displaces, human cognition, including tools for envisioning, problem (re)framing and selection, interdisciplinary collaboration, and the alignment of stakeholder needs with the strengths of a genAI system. Applying the toolkit helps us guide the development of useful, desirable genAI by building intuition about system capabilities, developing a systemic understanding of emerging problem spaces, and using a matrix to identify if and when to offload tasks to the system.”

Future of Learning? (by Julian Stodd): I mean, obviously read everything that Julian writes, but this part got me: “Of course as with everything that humans create, it is held within human context, and in this case it is contexts of power, knowledge, influence, control, law, religion, and so on. Knowledge is controlled, and hence so too is learning.” AI makes context so important.

The Creativity Issue (MIT Technology Review): Feels timely.

> “Defining creativity in the Age of AI: Meet the artists, musicians, composers, and architects exploring productive ways to collaborate with the now ubiquitous technology.”

SWiRL: The business case for AI that thinks like your best problem-solvers: Another step, and another piece of content for L&D to teach to the rest of the org.

> “"LLMs trained via traditional methods typically struggle with multi-step planning and tool integration, meaning that they have difficulty performing tasks that require retrieving and synthesizing documents from multiple sources (e.g., writing a business report) or multiple steps of reasoning and arithmetic calculation (e.g., preparing a financial summary)," they told VentureBeat.”

A new, open source text-to-speech model called Dia has arrived to challenge ElevenLabs, OpenAI and more: When I say things like AI can help companies punch above their weight, this is what I mean.

> “A two-person startup by the name of Nari Labs has introduced Dia, a 1.6 billion parameter text-to-speech (TTS) model designed to produce naturalistic dialogue directly from text prompts — and one of its creators claims it surpasses the performance of competing proprietary offerings from the likes of ElevenLabs, Google’s hit NotebookLM AI podcast generation product.”

A two-person startup.

Being Human in 2035: Experts predict significant change in the ways humans think, feel, act and relate to one another in the Age of AI: Look, each one of these experts is probably smarter than me and more qualified to make these calls, so I’ll take their thoughts as directional indicators rather than a lock on the way-too-clean decade timeline. Still some very interesting reading, and I’m always in favor of taking big swings.

Relyance AI builds ‘x-ray vision’ for company data: Cuts AI compliance time by 80% while solving trust crisis: This might not seem sexy at first glance, but this is the kind of work that will make the rest of it sing.

> “The company’s new Data Journeys platform, announced today, addresses a critical blind spot for organizations implementing AI — tracking not just where data resides, but how and why it’s being used across applications, cloud services, and third-party systems.”

Famed AI researcher launches controversial startup to replace all human workers everywhere: Sometimes smart people just do and say the stupidest stuff.

The EPOCH of AI: Human-Machine Complementarities at Work: Worth a read.

> “In this paper, we study the impact of AI and emerging technologies on the American labor force by exploring AI's potential for substitution and complementarity with human workers. We ask, "What human capabilities complement AI's shortcomings?". By focusing on human capabilities rather than AI's current capabilities, we shift the narrative from substitution-driven to a more complementary, human-centered approach.”

Fossilized Cognition and the Strange Intelligence of AI: Interesting read, but I love these lines:

> “LLMs don’t think—they echo fossilized patterns of human buried in time.
>
> Their intelligence warps through curved semantic space, not lived experience.
>
> We risk mistaking reflection for sentience—and losing ourselves in the mirror.”

Self-Organizing Graph Reasoning Evolves into a Critical State for Continuous Discovery Through Structural-Semantic Dynamics: “We report fundamental insights into how agentic graph reasoning systems spontaneously evolve toward a critical state that sustains continuous semantic discovery. By rigorously analyzing structural (Von Neumann graph entropy) and semantic (embedding) entropy, we identify a subtle yet robust regime in which semantic entropy persistently dominates over structural entropy. This interplay is quantified by a dimensionless Critical Discovery Parameter that stabilizes at a small negative value, indicating a consistent excess of semantic entropy….Our findings provide interdisciplinary insights and practical strategies for engineering intelligent systems with intrinsic capacities for long-term discovery and adaptation, and offer insights into how model training strategies can be developed that reinforce critical discovery.”
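For anyone who wants the structural half of that comparison made concrete: a common definition of Von Neumann graph entropy (assumed here; the excerpt doesn’t give the paper’s exact formulation or normalization) treats the trace-normalized graph Laplacian as a density matrix and takes its spectral entropy:

$$
L = D - A, \qquad \rho = \frac{L}{\operatorname{Tr}(L)}, \qquad S_{\mathrm{VN}}(G) = -\operatorname{Tr}(\rho \log \rho) = -\sum_{i} \lambda_i \log \lambda_i
$$

Here $A$ is the adjacency matrix, $D$ the diagonal degree matrix, and $\lambda_i$ the eigenvalues of $\rho$, with the convention $0 \log 0 = 0$. As a quick check, a single edge gives $\rho$ eigenvalues $\{0, 1\}$ and so $S_{\mathrm{VN}} = 0$, while a triangle gives $\{0, \tfrac{1}{2}, \tfrac{1}{2}\}$ and so $S_{\mathrm{VN}} = \log 2$. The excerpt doesn’t say how the Critical Discovery Parameter combines this with semantic (embedding) entropy, so no formula for that parameter is given here.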