Weekly Link Roundup #52

We're in Week 7 out of 52 for 2025. Still time to fire up those Resolutions. :)

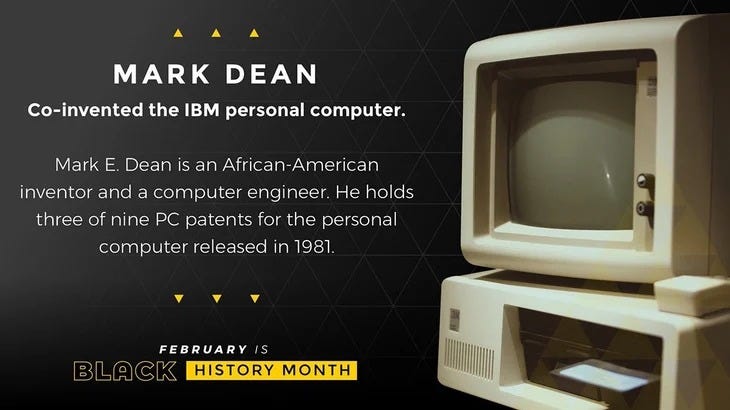

Ten Black Scientists That Science Teachers Should Know About: “Helping your students see the possibilities of careers in STEM fields means providing them with new role models. Black History Month offers teachers more opportunities to feature the contributions of Black scientists, engineers, and mathematicians in the context of their classroom instruction. We have made a list of some Black scientists, engineers, inventors, and mathematicians who have and are still making impacts, along with media-based teaching resources to help you bring their work — and stories — into your classroom.”

PromptLayer is building tools to put non-techies in the driver’s seat of AI app development: So a) this is a great idea, b) it feels like the exit for this company will be an acquisition, and c) the really interesting part is the lack of imagination I’ve seen so far about how to manage this kind of development.

“Zoneraich says the core of PromptLayer’s product is a “prompt registry”. “It’s CMS, it’s version control for prompts,” he explains. “You have a prompt, you create a new version, you could see why versions are different, and then you could choose which version’s your production version … That’s like the center of our product, and everything kind of expands out from that and tries to make that more useful.””

Just wondering if any org out there has come up with policies, not so much to govern the use of AI internally, but to reward and guide those who are particularly good at it? I know that “prompt engineer” was a job title with the half-life of milk left on the counter, but what happens when everyone across the org can build not only prompts but apps and GPTs? What happens when Larry in Finance or Jennifer in Marketing is building these productivity tools? Has your CISO stopped sleeping at night thinking about it? Does your Recruiting team know how best to write job descriptions to find this kind of talent?

See this too for another player in the application layer for AI deployments: Composo helps enterprises monitor how well AI apps work. This one too: Cognida.ai raises $15M to fix enterprise AI’s biggest bottleneck: deployment.
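For anyone wondering what a “prompt registry” boils down to, here is a minimal sketch of the idea in the quote: store versions, see what changed between them, and pick which one is production. This is my own illustration, not PromptLayer’s API; the class name `PromptRegistry`, its methods, and the `support_reply` prompt are all hypothetical.

```python
import difflib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt registry (not PromptLayer's actual API)."""

    # Prompt name -> list of versions, oldest first.
    versions: dict[str, list[str]] = field(default_factory=dict)
    # Prompt name -> 1-based version number currently marked as production.
    production: dict[str, int] = field(default_factory=dict)

    def publish(self, name: str, text: str) -> int:
        """Store a new version of a prompt and return its 1-based version number."""
        self.versions.setdefault(name, []).append(text)
        return len(self.versions[name])

    def diff(self, name: str, old: int, new: int) -> list[str]:
        """Return removed ('- ') and added ('+ ') lines between two versions."""
        history = self.versions[name]
        delta = difflib.ndiff(
            history[old - 1].splitlines(), history[new - 1].splitlines()
        )
        return [line for line in delta if line.startswith(("+ ", "- "))]

    def promote(self, name: str, version: int) -> None:
        """Mark an existing version as the production version."""
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"{name!r} has no version {version}")
        self.production[name] = version

    def get_production(self, name: str) -> str:
        """Return the text of the version currently marked as production."""
        return self.versions[name][self.production[name] - 1]


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.publish("support_reply", "Summarize the ticket.")
    registry.publish("support_reply", "Summarize the ticket in two sentences.")
    registry.promote("support_reply", 2)
    print(registry.diff("support_reply", 1, 2))
    print(registry.get_production("support_reply"))
```

The point for the governance question is that even this toy version leaves a trail of who-changed-what candidates: every prompt Larry or Jennifer ships has a history and an explicit production pointer, which is exactly the kind of thing a CISO would want to see.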

Lyft uses Anthropic's Claude chatbot to handle customer complaints: OK. Read this quote slowly and then see me on the other side. “If you're a frequent Lyft rider, you can see the early results of that collaboration when you go through the company's customer care AI assistant, which features integration with Anthropic's Claude chatbot. According to Lyft, the tool is already helping to resolve thousands of driver issues every day, and has reduced average resolution times by 87 percent.” An 87% reduction in ticket resolution time. What do you think will happen when someone deploys AI and reduces the average cost to produce typical learning/training content by 87%? What do you think the CFO will recommend? What do you think will happen to headcount if the L&D function doesn’t find another way to demonstrate value beyond the activity measurements in place now?

AI-Native Companies Are Growing Fast and Doing Things Differently (gift link): “AI native companies have a wide range of characteristics, but they all tend to see AI as more than a tool to increase productivity or drive a particular return on investment. It is a way for them to replace structured processes, which have been at the heart of the corporation throughout the industrial era, with fast, powerful, artificial intelligence and reasoning.” First, I hate the whole “native” trope. Second, I feel like all this talk about how these firms are doing things differently should have “to a degree” added to any description. Yay new tech. Yay getting rid of old processes. What’s that? All that change still sits on top of 800-year-old double-entry bookkeeping? Oh yeah. You can’t have fundamental change without fundamental change.

DeepSeek’s R1 and OpenAI’s Deep Research just redefined AI — RAG, distillation, and custom models will never be the same: When innovation gets talked about from a functional, organizational standpoint, it can be thought of as either a separate ‘skunkworks’ or an org-wide capability. Usually I’m in favor of the org-wide capability model. Much like with L&D, I think innovation as a practice has the best chance for success when we teach everyone how to do it. Regarding AI, I feel a little different. According to the article, OpenAI hallucinates about 8% of the time and DeepSeek is around 14%. Now pick which 8-14% of the decisions you need to make you’re OK with getting wrong. Or which 8-14% of the answers you give your customers you’re OK with being bad information. We humans don’t get every answer right as it is, but AI can get things wrong confidently, at scale, and at speed.
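To make “at scale” concrete, here is a back-of-the-envelope sketch. The 8% and 14% rates are the ones quoted above; the volume of 10,000 answers a day is a number I made up for illustration, and treating each rate as a flat per-answer error probability is a simplifying assumption.

```python
def expected_bad_answers(total_answers: int, error_rate: float) -> int:
    """Expected number of wrong answers, assuming a flat per-answer error rate."""
    return round(total_answers * error_rate)


# Hypothetical volume: 10,000 AI-generated answers per day (not from the article).
DAILY_ANSWERS = 10_000

# Rates quoted in the article: about 8% and about 14%.
low = expected_bad_answers(DAILY_ANSWERS, 0.08)
high = expected_bad_answers(DAILY_ANSWERS, 0.14)

print(f"Expected bad answers per day: {low} to {high}")
```

Under those assumptions that is somewhere between 800 and 1,400 confidently wrong answers every single day, which is the part a human team making the occasional mistake never has to plan for.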

Generative AI in the Real World: Measuring Skills with Kian Katanforoosh: Could be a key use in the L&D realm, especially as the required skills will be changing rapidly for the foreseeable future. It always makes me think about games though. On one level, games are just feedback loops, or skills assessments if you will. I wonder if instead of thinking about leaderboards or badges, there are other, more important lessons we could learn from game design. “How do we measure skills in an age of AI? That question has an effect on everything from hiring to productive teamwork. Join Kian Katanforoosh, founder and CEO of Workera, and Ben Lorica for a discussion of how we can use AI to assess skills more effectively. How do we get beyond pass/fail exams to true measures of a person’s ability?”

Hundreds of rigged votes can skew AI model rankings on Chatbot Arena, study finds: And we’re shocked when LLMs model human bias? “But Pang and his colleagues identified that it’s possible to sway the ranking position of models with just a few hundred votes. “We just need to take hundreds of new votes to improve a single ranking position,” he says. “The technique is very simple.””

Researchers created an open rival to OpenAI’s o1 ‘reasoning’ model for under $50: I’ve said it before: DeepSeek’s most important contribution to date (and maybe ever) has been showing people the playbook for disrupting the cost of model development.

The hottest new idea in AI? Chatbots that look like they think:

Bear with me on this one. A post on LinkedIn summarized this paper: The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers. The paper and the summary point out some interesting impacts on critical thinking among knowledge workers using GenAI. Then I saw this article on how more and more AI models are showing their reasoning along with their answers, and I wonder what impact that development will have on the critical thinking skills discussed in the first two pieces. I mean, what a day it would be if #LearningAndDevelopment were called upon to develop content to teach an org about critical thinking skills! See also: Google’s Gemini app adds access to ‘thinking’ AI models

Now more than ever, AI needs a governance framework: From Fei-Fei Li, the “godmother of AI,” three fundamental principles for the future of AI policymaking:

“First, use science, not science fiction. The foundation of scientific work is the principled reliance on empirical data and rigorous research.

Second, be pragmatic, rather than ideological. Despite its rapid progression, the field of AI is still in its infancy, with its greatest contributions ahead.

Finally, empower the AI ecosystem. The technology can inspire students, help us care for our ageing population and innovate solutions for cleaner energy — and the best innovations come about through collaboration.”

Nearly 500K students across California get access to ChatGPT: Remember when students' experience with the Web resulted in this huge expectation gap when they moved into the corporate world and met enterprise software? What do you think will happen now? “The California State University (CSU) system is introducing OpenAI's ChatGPT Edu — a version of ChatGPT customized for educational institutions — to more than 460,000 students and over 63,000 staff and faculty across its 23 campuses.”

EU lays out guidelines on misuse of AI by employers, websites and police: I think you’re gonna need some global help deploying AI. “Employers will be banned from using artificial intelligence to track their staff's emotions and websites will not be allowed to use it to trick users into spending money under EU AI guidelines announced on Tuesday.” See also: AI regulation around the world.

DSTLRY, the comic books marketplace, launches new customization features for artists: What? You’re not all comic book nerds like me? :-) Well anyway, I’m interested in this for two other reasons. The first is that business model innovations are always interesting, especially in tight-margin industries like comic books. The second is that I am still very interested in how we explore and innovate with regard to internal creators and how we can incentivize internal innovation.

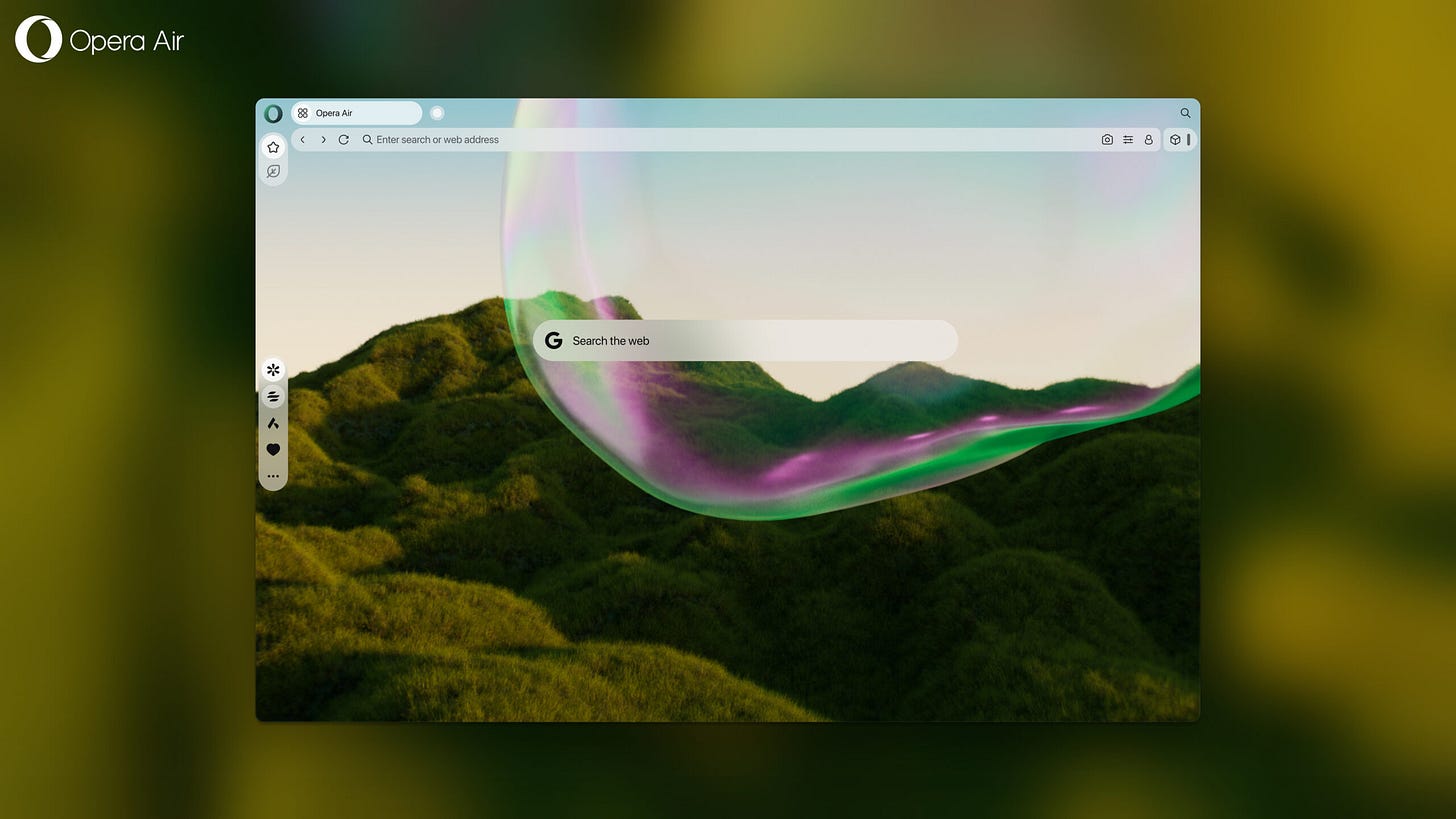

Opera’s new browser might save you from doomscrolling: I was actually an early, paying customer of Opera. I still think that innovations in browsers are important since they are, more than ever, our primary online windows. I saw browser tabs first in Opera and a number of other innovations that are now standard across the market.

Tana snaps up $25M as its AI-powered knowledge graph for work racks up a 160K+ waitlist: I know this is coming in late in the newsletter, but do not sleep on any company or product that is adding a knowledge graph to an AI deployment. “Everything that you do, whether it’s talking to your phone or having a meeting or writing your own notes, it is all automatically organized and connected together so that our AI can work,” Vassbotn said.