How AI can help Indigenous language revitalization, and why data sovereignty is important: I can’t love this story enough. To be clear, I consider efforts to use AI to save, maintain and reintroduce Indigenous languages to be one of the highest uses the tech can be put to. As noted in the story, this single invention won’t save the language; much more consistent and ongoing work will have to be done to get a model sufficiently trained, but it’s a start. > > “There are limitations, Running Wolf said, like sparse data and the polysynthetic nature of many Indigenous languages. An efficient AI, for example, can take 50,000 hours of English to create automatic speech recognition. Most Indigenous languages have so few speakers there is insufficient data to train AI, he said, and AI cannot recognize or understand things it's never seen before and requires information to replicate.”

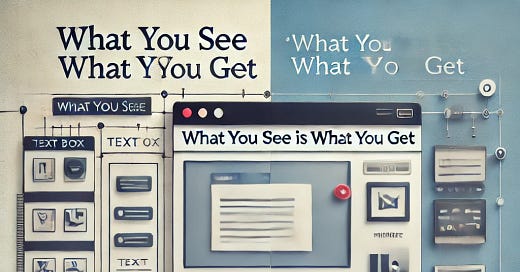

Of Modes and Men - Cut-and-paste, the one-button mouse, WYSIWYG desktop publishing—these are just a few of the user interface innovations pioneered by Larry Tesler: This is my favorite part - “Larry generated a lot of the basic ideas for the work we were doing,” says Douglas Fairbairn, a former colleague at PARC. “But he doesn’t have a big ego, so his name didn’t get attached to things. He wasn’t the one guy who did one big thing you’ll remember him for; he was a collaborator on many things.” > > We need more Larry Teslers.

Sakana AI’s ‘AI Scientist’ conducts research autonomously, challenging scientific norms: OK, first off, let’s agree there’s a lot more to test here, nothing ever works like it does in the pitch, and I need to actually read the paper it’s based on. That being said, if this gets us further down the road faster, we have to think not only about human-in-the-loop validation and verification, but also about business models and, what the heck, manufacturing models as well. > > “The AI Scientist automates the entire research lifecycle, from generating novel ideas to writing full scientific manuscripts. “We propose and run a fully AI-driven system for automated scientific discovery, applied to machine learning research,” the team reports in their newly released paper.” See also: That Amazing ‘The AI Scientist’ Agentic AI Strives To Fully Automate The Scientific Process But Watch Out For These Ferocious Gotchas

The Autonomous Instructional Designer Has Arrived: Arist Creator: I’m putting this one right under the Sakana story since I think you have to take both with a large grain of salt. One caveat right off the top is the need for clean data. You don’t just have some intake valve for documentation, shove manuals into it, and have a course pop out. I also want to know what other data is in the training set. How is the language translation validated? Who is going to set it up? Do I hear echoes of people training their replacements? Lots of questions here, and while I respect Josh a lot, this review reads a little too breathless and uncritical for me. > > “AI Creator can ingest more than 5,000 pages of documentation, translate content into 50+ languages, and generate comprehensive instructional courses (in the Arist format and in PowerPoint) with the rigor needed for even a pharmaceutical company.”

Study explores the transformation of educational system with the advent of AI: I include this article not to bash it; I’m sure the author is well meaning, and the exploration of how AI will impact the education system is needed. However, I have a HUGE problem with talk of the “system.” We really need to talk about two systems: the instructional model (how teaching is conducted) and the business or funding model (how the money happens). My argument is that you can change the former, but if the latter stays the same, the degree to which you will be able to change anything will be severely limited. The U.S. funding model of largely relying on property taxes is out of date and in need of massive overhaul. It’s led to gross inequities in the quality of educational opportunities between rich and poor districts and between urban, suburban, and rural districts. Until those issues are addressed, we will continue re-arranging deck chairs on an antiquated funding model that affects everything from teacher salaries to building budgets. So AI might change some things, but let’s not kid ourselves that it will change the “system.”

Sarvam AI Launches India’s First Open Source Foundational Model in 10 Indic Languages: Strong signal of national models of AI > > “The startup, which raised $41 million last year from the likes of Lightspeed, Peak XV Partners and Khosla Ventures, believes in the concept of sovereign AI- creating AI models tailored to address the specific needs and unique use cases of their country.”

California AI bill SB 1047 aims to prevent AI disasters, but Silicon Valley warns it will cause one: In large measure, this is a Choose Your Own Adventure game, except you choose which experts you believe are right. For me, I’m going to side with Meta’s chief AI scientist, Yann LeCun, who argues that the bill goes too far in regulating R&D in addition to applications and asserts that the bill is based on an “illusion of ‘existential risk’ pushed by a handful of delusional think-tanks.” See also: Pelosi Statement in Opposition to California Senate Bill 1047.

Piecing Together an Ancient Epic Was Slow Work. Until A.I. Got Involved: (gift link) This is pretty cool, to think we might actually get the full Gilgamesh story one day - “Now, an artificial intelligence project called Fragmentarium is helping to fill some of these gaps. Led by Enrique Jiménez, a professor at the Institute of Assyriology of the Ludwig Maximilian University of Munich, the Fragmentarium team uses machine learning to piece together digitized tablet fragments at a much faster pace than a human Assyriologist can. So far, A.I. has helped researchers discover new segments of Gilgamesh as well as hundreds of missing words and lines from other works.”

CrowdStrike Exec Shows Up to Accept 'Most Epic Fail' Award in Person: Actually mad respect for this. “Definitely not the award to be proud of receiving,” he told the audience. “I think the team was surprised when I said straight away that I would come and get it because we got this horribly wrong. We’ve said that a number of different times. And it’s super important to own it.”

So long, point-and-click: How generative AI will redefine the user interface: I never really believed in voice (Alexa, Siri, etc.) as the “new interface,” but I do think conversational UI has the potential to change the face of enterprise software.

Can AI add value to medical education and improve communication between physicians and patients?: The headline is misleading, as the article is really about one study that assessed whether ChatGPT4.0 could provide accurate diagnoses. Shocker, it’s only about 50% effective. Of course it is; it’s only broadly trained, and medical work demands specificity and specialized training sets. > > Do better, TechXplore.

Researchers Have Ranked AI Models Based on Risk—and Found a Wild Range: Biggest shocker of the article (tried to go heavy on the irony there): “The analysis also suggests that some companies could do more to ensure their models are safe. “If you test some models against a company’s own policies, they are not necessarily compliant,” Bo says. “This means there is a lot of room for them to improve.””

73% of organizations are embracing gen AI, but far fewer are assessing risks: Just a whole bunch of shocking/not shocking news this week > > “However, only 58% of respondents have started assessing AI risks. For PwC, responsible AI relates to value, safety and trust and should be part of a company’s risk management processes.” > > Risk assessment would have to include a clear understanding of the AI systems to be implemented and how they would access other systems, the impact of data and its cleanliness, and so on. I’d like to see the risk assessments of those 58% and what they’re looking at.

Epic judge says he’ll ‘tear the barriers down’ on Google’s app store monopoly: In a little under two weeks, the technology landscape could see a tectonic shift. > > “We’re going to tear the barriers down, it’s just the way it’s going to happen,” said Donato. “The world that exists today is the product of monopolistic conduct. That world is changing.”

Artists claim “big” win in copyright suit fighting AI image generators: “In an order on Monday, US District Judge William Orrick denied key parts of motions to dismiss from Stability AI, Midjourney, Runway AI, and DeviantArt. The court will now allow artists to proceed with discovery on claims that AI image generators relying on Stable Diffusion violate both the Copyright Act and the Lanham Act, which protects artists from commercial misuse of their names and unique styles.”