Weekly Link Roundup #27

Whew. There's a lot in there and a lot to think about past the new features in ChatGPT X.0.

Wow. Could those two headlines make it any clearer? You have a choice - figure out what those “value-added” tasks look like and start doing them OR get relegated down the value chain. Almost like that’s the exact dynamic that started this newsletter: Why don't you just buy a refrigerator?

Lynn Conway, a true pioneer, passes away at 86: “Former IBM and Xerox PARC engineer Lynn Conway, who helped shape the way chips are designed, died last weekend at age 86. The big picture: Conway broke ground both for her contributions to the tech industry and for her gender transition at a time when such a move was rare among professionals.” She announced her plans to transition and was fired by IBM in 1968 (IBM apologized in 2020).

Let Readers Read: “We purchase and acquire books—yes, physical, paper books—and make them available for one person at a time to check out and read online. This work is important for readers and authors alike, as many younger and low-income readers can only read if books are free to borrow, and many authors’ books will only be discovered or preserved through the work of librarians. We use industry-standard technology to prevent our books from being downloaded and redistributed—the same technology used by corporate publishers. But the publishers suing our library say we shouldn’t be allowed to lend the books we own. They have forced us to remove more than half a million books from our library, and that’s why we are appealing.” As an anthropologist and historian, I can’t agree with this enough.

Gen AI and Gen Alpha: The impacts of growing up in an innovation cycle: LOVE this article for a couple of reasons. First, I think it offers a much more nuanced view of how generations grow up with and adapt to technology than, say, The Anxious Generation (which I think has many flaws). I would say that the article’s generations are a bit off - Gen X grew up with the birth of the Internet, Millennials grew up with the Web, and so on. Second, this is spot on: “Right now, members of Generation Alpha are growing up alongside the evolution of generative AI. Its near-instant outputs generated in text and visual formats, even through the simplest prompts like “write a toast I can make at an upcoming wedding” are profound.” And if you want a third reason to read the article, think about this: these kids growing up with AI are your next cohort of employees, and they will bring those experiences and expectations with them.

A social app for creatives, Cara grew from 40k to 650k users in a week because artists are fed up with Meta’s AI policies: I’d frame this as an interesting but currently weak signal. It will get stronger if a site like Cara succeeds and creates a model for other sites to follow with other artist populations. “Artists have finally had enough with Meta’s predatory AI policies, but Meta’s loss is Cara’s gain. An artist-run, anti-AI social platform, Cara has grown from 40,000 to 650,000 users within the last week, catapulting it to the top of the App Store charts.” WIRED interview with the Cara founder.

Black founders are creating tailored ChatGPTs for a more personalized experience: Look for cultural nuance and language localization to be differentiators in the LLM space. “ChatGPT, one of the world’s most powerful artificial intelligence tools, struggles with cultural nuance. That’s quite annoying for a Black person like Pasmore. In fact, this oversight has evoked the ire of many Black people who already did not see themselves properly represented in the algorithms touted to one day save the world. The current ChatGPT offers answers that are too generalized for specific questions that cater to certain communities, as its training appears Eurocentric and Western in its bias. This is not unique — most AI models are not built with people of color in mind. But many Black founders are adamant not to be left behind. Numerous Black-owned chatbots and ChatGPT versions have popped up in the past year to cater specifically to Black and brown communities, as Black founders, like Pasmore, seek to capitalize on OpenAI’s cultural slip.”

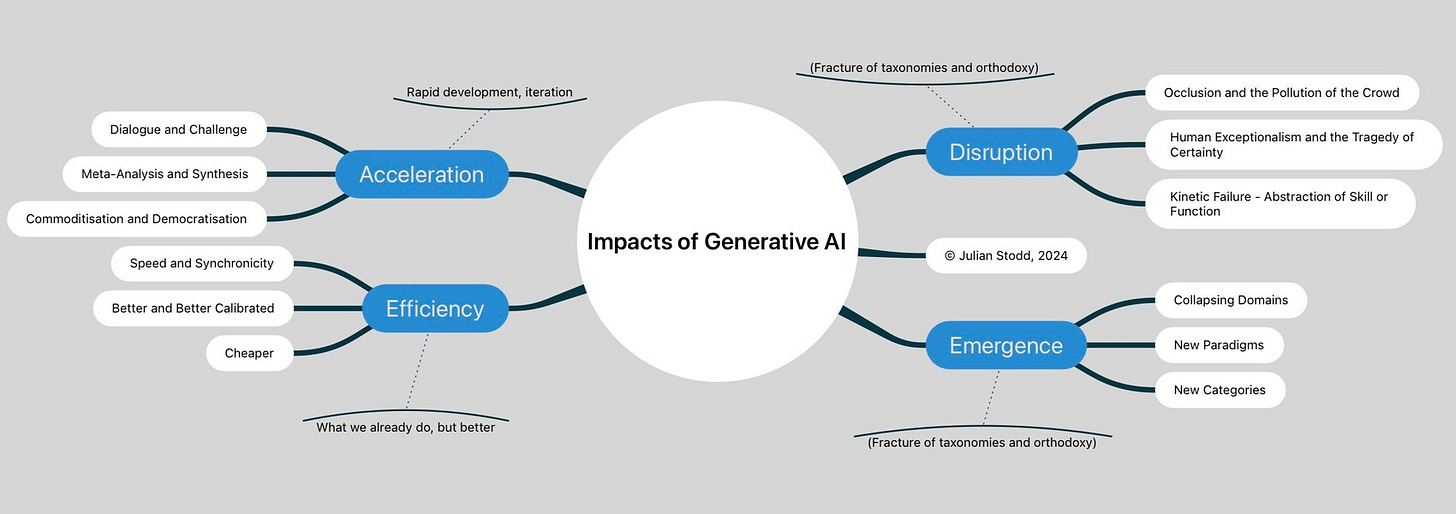

Generative AI - Four Areas of Impact: Typically thoughtful work from Julian Stodd. This really resonates with me because I think it works at the best level for us to be thinking on right now. While we can (and should) be experimenting with current AI functionality, the most fruitful work will come from re-imagining work at a more fundamental level.

Grassroots innovation: How a patient-driven device for diabetes won FDA clearance: Full disclosure - this development/product/story is work-related for me and that’s how I found out about it - regardless, the idea that a DIY community could grow up around a life-saving device is just amazing to me. Follow-on piece - Deka’s automated insulin delivery system, powered by patient-led app, gets FDA clearance.

Wells Fargo Fires Over a Dozen for ‘Simulation of Keyboard Activity’: What gets me about this story is that they don’t seem to have been fired for lack of productivity but for the unethical act of lying about whether they were working.

Cognigy lands cash to grow its contact center automation business: This is one of those stories where you need to think about the call center as an analogy for any system that requires contact with customers/clients/users/learners (whatever you call the people you deliver value for). Really, the distance between those various populations is the training data set. “So what sets Cognigy apart? For one, the platform can be deployed either locally or in a private or public cloud (e.g., AWS). And it’s scalable; Cognigy manages AI agents that can handle up to tens of thousands of customer conversations at once.” Now do you see what I mean when I say that what can be automated, will be automated, and to a higher degree of quality and at a lower cost than ever before? (seriously, I say that ALL the time ;-))

Tektonic AI raises $10M to build GenAI agents for automating business operations: Good for them. I think this is probably the fastest-moving space in the AI world right now - the creation of agents to automate work. Remember back when we used to say “if a robot can do your job, it will”? We should change that from robot to AI agent. There will be tons of innovation in this space as the UXs we see use natural language more and more to sit on top of specifically trained LLMs, and as the process of connecting them to existing org systems (HRIS, LMS, etc.) gets easier and simpler. Of course, those advances will bring their own challenges. Welcome to the new world.

Is psychological safety being weaponized?: Incoming pet peeve: Can we PLEASE stop saying "weaponized"???!!! I used to work in/for DoD - we weaponized stuff. It's now become shorthand either for something being used in a way I don't like or for a failure of design. Let's just cut back on dropping truth bombs, firing shots across the bow, and in general using the language of war to describe that which is most certainly not war.

Relationship between sense of coherence and depression, a network analysis: Wow. Almost as if community and purpose are important to mental well-being (don’t mean to sound snarky - this is critical work). So how could you see your company deploying this idea internally to help employees maintain better mental health? “The “sense of coherence” incorporates the notion that when life seems comprehensive, manageable, and meaningful for an individual, even under tremendous adversity, this accounts for stamina and confidence. Its absence is associated with mental health problems, including depression. The current analysis aimed to explore the relationship between the sense of coherence and depression through a network analysis approach in a sample of 181 people with depression.”

Microsoft is killing off GPT Builder in Copilot Pro for consumers, just three months after broad availability: Almost like the market is changing so rapidly now on both the business and technical fronts that you probably should not be signing long-term (12-month) contracts.

This Hacker Tool Extracts All the Data Collected by Windows’ New Recall AI: Who didn’t see this happening?

In “This is NOT the Way” News:

US sues Adobe for ‘deceiving’ subscriptions that are too hard to cancel: “When customers do attempt to cancel, the DOJ alleges that Adobe requires them to go through an “onerous and complicated” cancellation process. It then allegedly “ambushes” customers with an early termination fee, which may discourage customers from canceling in order to avoid the fee. The DOJ alleges this is a violation of federal laws to protect consumers.” See also this for more news on how to piss off your customers: Sonos draws more customer anger — this time for its privacy policy: “Sonos has made a significant change to its privacy policy, at least in the United States, with the removal of one key line. The updated policy no longer contains a sentence that previously said, “Sonos does not and will not sell personal information about our customers.” That pledge is still present in other countries, but it’s nowhere to be found in the updated US policy, which went into effect earlier this month.”

First Came ‘Spam.’ Now, With A.I., We’ve Got ‘Slop’ - A new term has emerged to describe dubious A.I.-generated material: “Slop, at least in the fast-moving world of online message boards, is a broad term that has developed some traction in reference to shoddy or unwanted A.I. content in social media, art, books and, increasingly, in search results.” The AI version of pink slime.

Publishers Target Common Crawl In Fight Over AI Training Data: “Although Common Crawl has been essential to the development of many text-based generative AI tools, it was not designed with AI in mind. Founded in 2007, the San Francisco–based organization was best known prior to the AI boom for its value as a research tool.” What’s amazing to me is how fast we’re seeing all of this foundational work that’s powering the AI boom get uncovered.