Heat Map: Collective Intelligence (humans + AI), two big thinkers, and organizational implications

So I think I’ve mentioned before that I tend to see things in terms of heat maps. How does that work? I look at a LOT of information on a daily basis. In my Link Roundups, I pass some of those items along with some commentary. There are times, though, when I start seeing so many stories with the same focus that it becomes a hot spot. I’m aware that what gets my attention here is largely the result of what comes in from the sources I follow, so my maps will differ from other people’s, and I’m not trying to assert any kind of universality for my observations. Phew. That being said…

Two things have risen to become hot spots for me this week: agentic architectures and human/AI collective work. I already wrote about the agentic piece in “Agents, and Architecture, and Access - oh my!”. The tl;dr: let’s say your company uses MSFT and Salesforce. That means you already have two services in-house that can deploy agents (little but smart bots/AIs) to complete certain tasks within the org. So someone in your org should be looking at how those two services can best work together, who gets to create and deploy agents, what jobs those agents can do, how they will affect the org’s infosec, and on and on. I think agents can be of great help, but using them productively will require thoughtful consideration of all the dynamics above and then some.

The other piece I really want to get into here could be classed under the heading of “collective intelligence”, and in this sense I’m using that term to mean the collective of AI and humans. The two thinkers I follow here are Ross Dawson and Gianni Giacomelli. Here are some of the things I’ve been seeing from these two big brains:

(link) “Researchers created a knowledge graph based on 58 million journal papers to fuel personalized research ideas for scientists. Over 4400 ideas generated by the system SciMuse (paper link) were evaluated by 100 research group leaders. They were then able to predict with strong accuracy which of its ideas would be ranked most interesting, using a couple of different approaches. The greatest future potential for science lies in interdisciplinary collaboration. Tools such as SciMuse provide massive potential for novel and unexpected research ideas that might not otherwise come to the surface.” This raises LOTS of questions about how humans and AI can work together. The simplest way for me to think about it is to imagine an army of researchers who don’t sleep or eat and who just read and look for connections 24/7. I think this opens up a vast field of adjacent possible discoveries, but we will still have to think about what changes our orgs will need to make to effectively evaluate all the new ideas and then move them into production.

(link) “New research indicates that some form of collective intelligence of AIs works well at higher scale than in humans. In an experiment by Giordano De Marzo, 1,000 separate AIs converged in their debates (humans would generally struggle with that number, being bound to something close to the so-called Dunbar number, which is around 150). (study link)” Here again, we’re confronted with the potential of AI and humans working together, but the challenge will be to shift our org structures to make the best use of the scale and speed that this collective work will bring us.

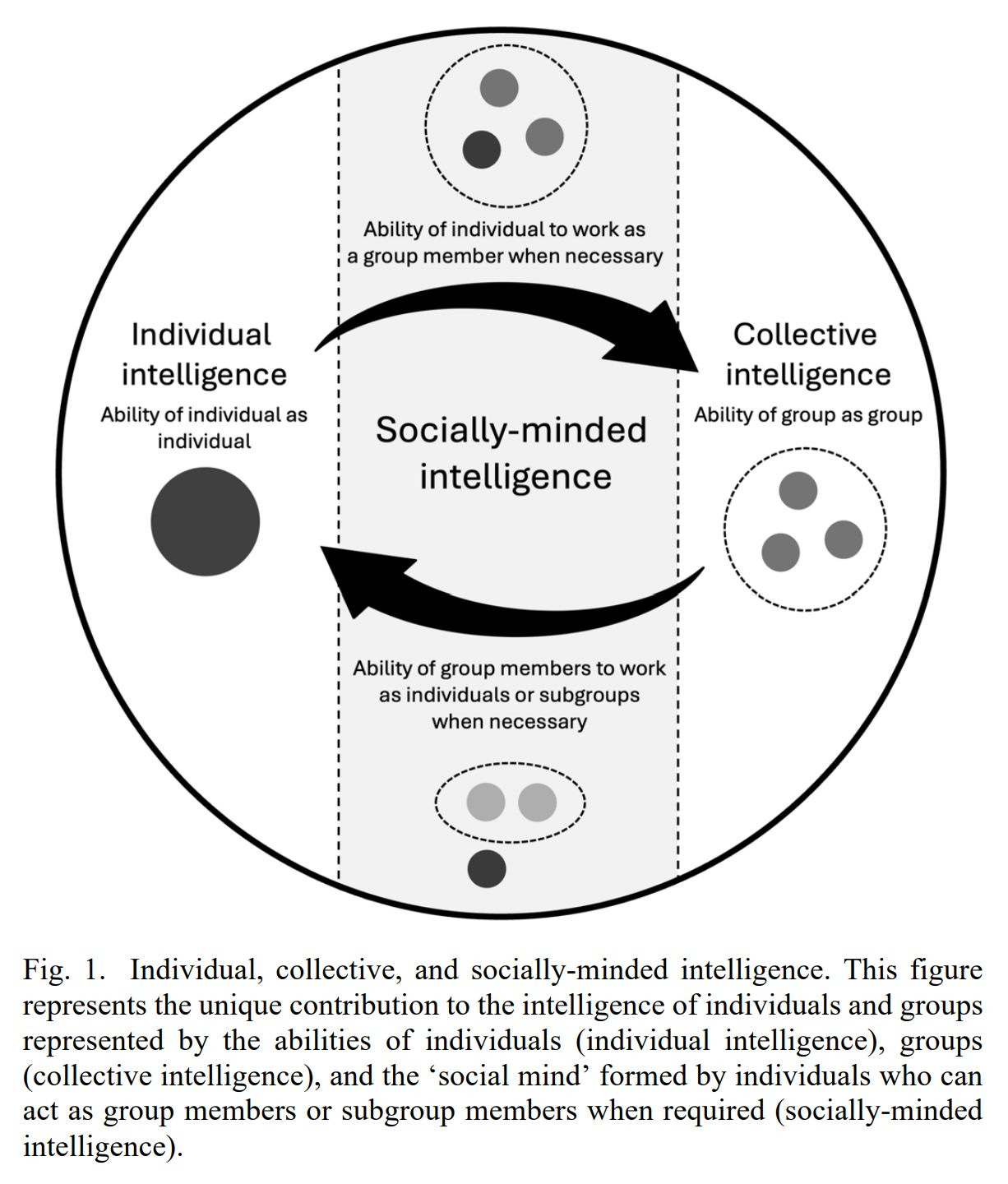

(link) The really cool part is when these two start adding context to each other’s posts. Here Gianni adds some context to Ross’s post on a paper that posits that "A multi-agent system’s group socially-minded intelligence is the extent to which each agent in the system can perceive, think, and act as a group member, subgroup member, or individual — depending on the demands of the context — and can act accordingly to work towards the system’s superordinate goals…the capabilities we have outlined may be useful for designing AI agents that can better respond to changes in the social situation that might lead a human teammate to act more as an individual or as a group member. For example, socially-minded agents could estimate when they should work with a human teammate or work autonomously."

Ross argues, and I agree, that we are “shifting wholesale into a Humans + AI world.” IMHO, we need to stop talking about IF this will happen and start working on HOW we will make it not just productive but inclusive. There are implications here for hiring, team building, internal comms, and collaboration, and my fear is that our org structures are nowhere near as sophisticated as this tech is.

So, what to take from all this? I’ve made this point before and will make it again: the new features released with each new version of whatever AI you’re looking at are largely meaningless. Not because they don’t add to the layer of possibilities available to us, but because the features already released outstrip our ability to make use of them at the team/org level.

While we should continue to experiment with the new tech, these articles point toward something else: the real way to unlock the potential here is to consider how we could build orgs and teams that work with and integrate AI from the ground up. The orgs that do this work, the hard work of thinking through how to organize teams, workflows, etc., are the ones that will hit that inflection point first and leap furthest ahead. Otherwise, we’re just giving lasers to cavemen (organizationally, that is).